What is Perplexity in Research: - In the field of modern research, particularly within Natural Language Processing (NLP) and machine learning, the term "perplexity" is more than just a synonym for confusion. It is a well-defined statistical measurement that plays a vital role in evaluating how well a probability model or language model predicts a given sample of data. Whether you're building a chatbot, summarizing texts, or working on text classification, understanding perplexity can offer deep insights into your model’s performance.

This article will guide you through the core concept of perplexity, its mathematical formulation, its applications in real-world research, and its limitations. Let’s dive deep into the concept and uncover how perplexity shapes the way researchers and engineers build language-based systems.

What is Perplexity in Research?

Perplexity, in its simplest form, measures how well a probability model predicts a sequence of words. The lower the perplexity, the better the model’s ability to predict text or language data. In other words, a lower perplexity score indicates a more accurate language model.

In the context of research, especially computational linguistics, perplexity is frequently used to assess:

- The predictive performance of language models

- Comparative analysis between different NLP algorithms

- The training progress of a model over time

- Model optimization during hyperparameter tuning

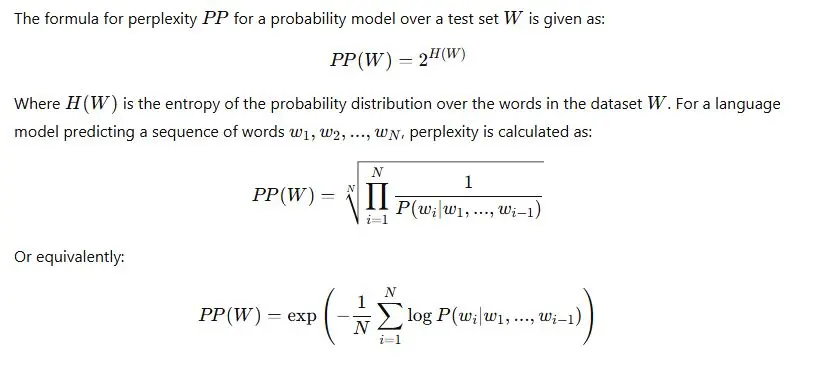

Mathematically, perplexity is derived from entropy, a fundamental concept in information theory introduced by Claude Shannon. Entropy measures the uncertainty or randomness in a system, and perplexity transforms this into a more interpretable metric.

Mathematical Definition of Perplexity

Key Points:

- Lower perplexity = better prediction

- It assumes that a perfect model will have a perplexity of 1

- Higher perplexity means more surprise or uncertainty

Where is Perplexity Used in Research?

1. Natural Language Processing (NLP)

In NLP, language models like GPT, BERT or LLaMA are trained to predict sequences of text. Researchers use perplexity as a core metric during training and testing phases to evaluate how well the model understands the structure of a language.

Applications in NLP:

- Speech recognition systems

- Machine translation (e.g., English to Hindi)

- Autocompletion and text generation

- Sentiment analysis and topic modeling

2. Machine Learning Model Evaluation

In general machine learning research, perplexity is used to:

- Compare generative models (e.g., LDA for topic modeling)

- Evaluate unsupervised learning algorithms

- Measure the diversity and accuracy of predicted output sequences

3. Information Retrieval and Text Mining

In information retrieval systems, perplexity helps measure:

- The efficiency of information modeling

- The coherence of generated summaries or reports

- User behavior prediction based on query logs

Entropy and Perplexity: What's the Connection?

Perplexity and entropy are closely related. Entropy measures the expected amount of information (or uncertainty), while perplexity translates that uncertainty into a base-2 logarithmic scale to express how "confused" the model is.

Example:

If a language model assigns equal probabilities to 4 words, the entropy is 2 bits, and the perplexity is 22=42^2 = 422=4. That means the model is as "confused" as if it had to choose uniformly between 4 options.

How to Interpret Perplexity Scores

| Perplexity Value | Interpretation |

|---|---|

| 1 | Perfect prediction |

| 20-50 | Good performance (context-dependent) |

| 100+ | Model is confused or poorly trained |

| ∞ (infinity) | Random or completely uncertain prediction |

Key Insight:

Perplexity does not have an absolute scale. It's always relative and depends on:

- Vocabulary size

- Dataset complexity

- Training data quality

Advantages of Using Perplexity in Research

- Simple to calculate

- Interpretable metric

- Applicable to different models and datasets

- Useful for model comparison

- Sensitive to data structure (e.g., sentence syntax, grammar)

Limitations of Perplexity

Despite its popularity, perplexity has several limitations in research and production environments.

1. Vocabulary Bias

Perplexity can be misleading if the model’s vocabulary differs significantly between training and testing datasets.

2. Not Always Correlated with Human Judgment

A model with lower perplexity doesn't always produce semantically or grammatically correct sentences.

3. Overfitting Danger

Overly optimized models might "cheat" the perplexity metric without generalizing well on unseen data.

4. Data Dependency

Different datasets (e.g., Wikipedia vs. Twitter) will naturally produce different perplexity values, making direct comparison invalid unless conditions are controlled.

Perplexity vs Accuracy: What’s the Difference?

| Feature | Perplexity | Accuracy |

|---|---|---|

| Type | Probabilistic | Classification-based |

| Output Range | 1 to ∞ | 0 to 1 |

| Use Case | Language models, sequence prediction | Supervised tasks (e.g., spam detection) |

| Measurement | Based on log probability | Based on correct predictions |

| Sensitive To | Entire distribution | Only most probable output |

Perplexity in Modern AI Research (LLMs & Transformers)

With the rise of Large Language Models (LLMs) like GPT-4, Claude, PaLM, and Mistral, perplexity continues to be an important benchmark.

Example:

- GPT models often report validation perplexity after every training epoch

- Perplexity helps tune model depth, attention heads and learning rate

- Researchers correlate perplexity trends with downstream task accuracy

In benchmark datasets like WikiText-103 or Penn Treebank, perplexity reduction is often the first sign of effective model training.

Improving Perplexity: Research Strategies

If you're working on a research project and your model’s perplexity is too high, consider the following:

- Clean Your Dataset – Remove irrelevant or noisy data

- Increase Training Epochs – Let the model learn deeper

- Use Tokenization Efficiently – Apply subword units (e.g., Byte-Pair Encoding)

- Optimize Hyperparameters – Batch size, learning rate, dropout

- Try Transformer Models – They generally achieve lower perplexity than RNNs or LSTMs

Tools for Calculating Perplexity

Popular NLP frameworks that support perplexity calculations:

| Tool | Use Cases |

|---|---|

| TensorFlow | Deep learning-based models |

| PyTorch | Custom language modeling |

| HuggingFace | Pretrained LLMs with perplexity scoring |

| NLTK | Simple n-gram models |

| OpenAI API | Perplexity evaluation of GPT outputs |

Conclusion

Perplexity is a powerful yet nuanced metric used extensively in the research world, especially within the domains of natural language processing, machine learning, and information theory. It offers researchers a way to evaluate how well a model predicts language, which is central to everything from autocomplete engines to intelligent virtual assistants.

Also Read- How to Use AI for Peer Review Feedback | Best AI Tools 2025